History

Not all age-old occupations are extinct. While we no longer need the knocker-up to make sure we are awake for work, due to the invention of the alarm clock, there will always be scavengers out there looking for lost treasure. Most of them probably don’t scavenge in the mud, but some do. It is a hobby that lots of people enjoy, but not exactly an occupation anymore. Nevertheless, there is money to be made doing it.

Originally, mudlarking, or the scavenging of the river mud for items of value, was principally undertaken in London, along the River Thames. Most commonly, the mudlarks were young boys, from the 1700s to the early 1900s. While mudlarking would be a suitable way for young boys to make some money, the infatuation would not likely last very long. They could help their families make ends meet, but it was no get-rich-quick endeavor. These days, mudlarking is simply a leisure activity.

In March 1904, author Dorothy Menpes wrote about London’s Mudlarks in a piece published in the Batley Reporter and Guardian: “Among London children are the mud-larks, the small scavengers of the Thames, generally clothed in scanty jackets, sunshine, and mud, wriggling unceasingly in and out of chains and anchors, and curling round the figureheads of the barges, never for one moment still, slippery and elusive as eels. They are very mischievous sprites, these children, always appearing where you least expect them, always ferreting about with those terribly keen black eyes of theirs, and frequently swooping down like seagulls upon some hidden treasure embedded in the mud; and their chief anxiety seems to consist in evading the Thames police. The Mudlarks of the Thames were a unique community, with a ‘language of their own,’” as Menpes details, “…it is a special mud-lark patois, and appears to be a mixture of the swear-words of sailors and the slang of landsmen. There are generally fights going on among these urchins for the possession of some treasure; and their constant enemies are the bargees, whom they delight to cheat and annoy by clinging to ropes and chains, thereby getting a ride gratis, as a street boy does on a Putney bus.”

They would have to dig around in the mud looking for their treasures, because back then, they didn’t have things like metal detectors. The Mudlarks (or scavengers) of today can simply walk along the beach, their yard, or the trail they are walking on to find possible treasures with very little effort. I suppose that the Mudlarks of old might say that the scavengers of today cheat to find their treasure, but as technology comes about, the game changes. It’s just the name of the game.

In the realm of “odd jobs,” we find that some jobs were not just odd; some were downright cruel. I know that some of the Egyptian slaves were covered in honey, so the flies bothered them and not Pharaoh. Of course, that was not really an occupation, because they were slaves. I’m sure there were a number of other things slaves were subjected to that were equally cruel, and they just had no say in the matter.

In the world of monarchies of the past, while people weren’t slaves, there were a number of odd occupations. I simply can’t imagine choosing the occupation of “whipping boy.” Apparently, it was thought, in times past, that while princes needed discipline, and the discipline of choice in the past was spanking, one should never spank a prince. So, when a prince needed discipline for breaking the rules, instead of the naughty royal, there was a “whipping boy,” who had the unfortunate job of stepping up to receive the punishment for the prince.

Now, I don’t know about you, but if I were going to have that job, I would want to be well compensated, because I’m not real fond of spankings, and since the prince wasn’t getting the spanking, who knows how hard the spanking was. I suppose it was dependent on what rule was broken, but since I wouldn’t have broken said rule, I wouldn’t be really happy about any punishment. To make matters worse, what if the prince simply enjoyed seeing the whipping boy get spanked? Some princes have a tendency to feel entitled, and might enjoy watching someone get punished for something the prince did. It’s really not a position anyone wants to find themselves in…especially if they weren’t given any choice in the matter.

The whipping boy had the advantage of being educated alongside the prince, so I suppose that was one good thing. The boys most likely became friends, so the whipping boy could hope that seeing a friend punished would provide an equivalent motivation for the prince not to repeat the offence. Whipping was a common punishment administered by tutors in early modern Europe. This was not a highly documented occupation, and some princes were indeed whipped by their tutors, although some people suggest that nobles might have been beaten less often than other pupils. Some historians don’t even believe that such an occupation existed. Others believe that if it did exist, the use of a whipping boy applied only in the case of a boy king, protected by divine right, and not to mere princes. Whatever the case may be, I don’t think “whipping boy” would be an occupation I would want to have, nor would I want it for my child.

With the election of Thomas Jefferson as third president, on February 17, 1801, came the first peaceful transfer of power from one political party to another in the United States. Nevertheless, the election was an unusual one. By this time, Jefferson had helped to draft the Declaration of Independence, had served in two Continental Congresses, as minister to France, as secretary of state under George Washington and as John Adams’ vice president. These credentials probably made him the best person for the job in the entire world.

While it was obvious that Jefferson was the best man for the job, vicious partisan warfare was the name of the game during the campaign of 1800 between Democratic-Republicans Jefferson and Aaron Burr and Federalists John Adams, Charles C Pinckney and John Jay. The ongoing battle raged between Democratic-Republican supporters of the French, who were involved in their own bloody revolution, and the pro-British Federalists who wanted to implement English-style policies in American government. The Federalists hated the French revolutionaries because of their overzealous use of the guillotine and, as a result, were less forgiving in their foreign policy toward the French. They pushed for a strong centralized government, a standing military, and financial support of emerging industries.

Jefferson’s Democratic-Republicans, on the other hand, preferred limited government, complete and absolute states’ rights and a primarily agricultural economy. They feared that Federalists would abandon revolutionary ideals and revert to the English monarchical tradition. When Jefferson was secretary of state under Washington, he opposed Secretary of the Treasury Hamilton’s proposal to increase military expenditures and resigned when Washington supported the leading Federalist’s plan for a national bank.

A bloodless but ugly campaign ensued, in which candidates and influential supporters on both sides used the press, often anonymously, as a forum to fire slanderous volleys at each other. It sounds a lot like some of our election campaigns of today. Then came the laborious and confusing process of voting, which began in April 1800. Individual states scheduled elections at different times, which I think further confused the situation, and although Jefferson and Burr ran on the same ticket, as president and vice president respectively, the Constitution still demanded that votes for each individual be counted separately. As a result, by the end of January 1801, Jefferson and Burr emerged tied at 73 electoral votes apiece. Adams came in third at 65 votes. While that left Adams out, it left a tie between Jefferson and Burr. The result created a big problem.

The resulting tie sent the final vote to the House of Representatives. That would not make for an easy decision either. A number of those in the Federalist-controlled House of Representatives insisted on following the Constitution’s flawed rules and refused to elect Jefferson and Burr together on the same ticket. The highly influential Federalist Alexander Hamilton, who mistrusted Jefferson, but hated Burr more, persuaded the House to vote against Burr, whom he called the most unfit man for the office of president. Of course, that caused a hatred between Hamilton and Burr that led Burr to challenge Hamilton to a duel in 1804. Burr won the duel when he killed Hamilton. Two weeks before the scheduled inauguration, Jefferson emerged victorious, and Burr was confirmed as his vice president. It was the first of only two times the presidency has been decided by the House of Representatives.

As evening arrives, and the streets grow dark, the streetlights begin to light up. When we go out to drive in the nighttime, we take for granted that the streets won’t be quite as dark as the night, because the streetlights will add a little bit of light to show you where the roads, corners, and even pedestrians are. We take all that for granted, but it wasn’t always that way. Electric streetlights came into being in Paris in 1881, but it would be quite a while before all the towns and cities of the world would have them.

That brings us to the now non-existent occupation of the lamplighter. It sounds like a self-explanatory profession from its name, but there was much more to it than simply lighting a lamp at night. Newspapers from the past can better explain the actual job of the lamplighter. Advertisements were posted for the job of lamplighter, such as the “Public Notice” which appeared in the Beeston Gazette and Echo in July 1928: “Applications are invited for the post of Lamplighter for the ensuing Lighting period. The person appointed will be required to light, extinguish, and clean the lamps. Wages £2 per week.” The Beeston Gazette and Echo of July 21, 1928, went on to say, “And so, as a lamplighter, you would be required to light lamps at dusk, extinguish them again, whilst also keeping them in good repair. This particular position, for the parish of Gedling in Nottinghamshire, would pay £2 a week.” That would be approximately £91 ($114.64 US) in today’s money. No, the pay wasn’t great then or now, but in small towns it probably wouldn’t take long to do most of the work. Still, maintenance and cleaning added to the work.

Also, remember that the job was year-round and in every season. It wouldn’t make sense to ride a bicycle, take a horse, or use any other vehicle (these days, a car) to go from corner to corner lighting the lamps. So they walked, and walked!! One lamplighter named John Maher logged a record 150,000 miles. His story was told in the Prescot Reporter and St. Helens General Advertiser. John Maher is described as one of the “unsung heroes” of St. Helens, “whose presence in the community is taken for granted and whose necessity is rarely proclaimed.” The Prescot Reporter and St. Helens General Advertiser went on to say on April 21, 1939, “For 43 years Maher has ‘been pounding the pavements of St Helens,’ during which it was estimated that he covered 150,000 miles, in all weathers. At the age of 64, the lamplighter had…worked every hour in the day. His day commenced just before dawn and ended after sundown. He also worked four hours during the interim period cleaning, and as the times of dawn and sunset are always changing, Mr. Maher has to change the times of his going to work accordingly.”

The life of a lamplighter was a hard one, once described like this: “I may say that as a lamp-lighter I am exposed to all weathers and have a lot of broken rest and excessive walking. Everybody notices the great improvement in me since I took Phosferine. I continue the remedy regularly twice a day, and I would not be without it on any account, as I consider it has given me a new lease of life.” Apparently, the job was so hard that many lamplighters took some sort of medicine to make it through their days. Clearly, we can consider ourselves blessed to have electric streetlights these days.

MV Joyita, an American merchant vessel, was acquired by the United States Navy in October of 1941. She was not a big ship and only ran with a crew of 25 men. Joyita was a 69-foot wooden ship built in 1931 as a luxury yacht by the Wilmington Boat Works in Los Angeles. She was originally built for movie director Roland West, who named the ship for his wife, actress Jewel Carmen. The word “joyita” means “little jewel” in Spanish. The ship’s hull was constructed of 2-inch-thick cedar on oak frames. She had a beam of 17 feet and a draft of 7 feet 6 inches. Her net tonnage was 47 tons and her gross tonnage approximately 70 tons. She had tanks for 2,500 US gallons of water and 3,000 US gallons of diesel fuel. In 1936 West sold the ship to Milton E Beacon. During this period, she made numerous trips south to Mexico and to the 1939–1940 Golden Gate International Exposition in San Francisco. During part of this time, Chester Mills was the captain of the vessel.

In October 1941, two months before the attack on Pearl Harbor, Joyita was acquired by the United States Navy and taken to Pearl Harbor, Hawaii, where she was outfitted as yard patrol boat YP-108. The Navy used her to patrol the Big Island of Hawaii until the end of World War II. In 1943 she ran aground and was heavily damaged, but the Navy was badly in need of ships, so she was repaired. At this point, new pipework was made from galvanized iron instead of copper or brass. In 1946, the ship was surplus to Navy requirements and most of her equipment was removed.

She was decommissioned and, in 1948, Joyita was sold to the firm of Louis Brothers. They added a cork lining to the ship’s hull along with refrigeration equipment. The ship also had two Gray Marine diesel engines providing 225 horsepower, and two extra diesel engines for generators. Joyita was sold to William Tavares in 1950, but he decided he didn’t really need her, so he sold her in 1952 to Dr Katharine Luomala, a professor at the University of Hawaii. Luomala chartered the boat to her friend, Captain Thomas H “Dusty” Miller, a British-born sailor living in Samoa.

Miller used the ship as a trading and fishing charter boat. About 5:00 AM on October 3, 1955, Joyita left Samoa’s Apia harbor bound for the Tokelau Islands, about 270 miles away. They got a late start, because her port engine clutch failed. Joyita eventually left Samoa on one engine. She was carrying sixteen crew members and nine passengers, including a government official, a doctor (Alfred “Andy” Denis Parsons, a World War II surgeon on his way to perform an amputation), a copra buyer, and two children. Her cargo consisted of medical supplies, timber, 80 empty 45-gallon oil drums, and various foodstuffs. Joyita was scheduled to arrive in the Tokelau Islands on October 5th, after an expected voyage of between 41 and 48 hours. On October 6th, a message from Fakaofo port reported that the ship was overdue. No distress signal from the crew was ever received. A search-and-rescue mission was launched and, from October 6th to 12th, Sunderlands of the Royal New Zealand Air Force covered a probability area of nearly 100,000 square miles of ocean, but no sign of Joyita or any of her passengers or crew was found. Five weeks later, on November 10th, Gerald Douglas, captain of the merchant ship Tuvalu, en route from Suva to Funafuti, sighted Joyita more than 600 miles west of her scheduled route, drifting north of Vanua Levu. The ship was partially submerged and listing heavily (her port deck rail was awash) and there was no trace of any of the passengers or crew. Also missing were her four tons of cargo. The recovery party noted that the radio was discovered tuned to 2182 kHz, the international marine radiotelephone distress channel. No sign of the passengers or crew was ever found.

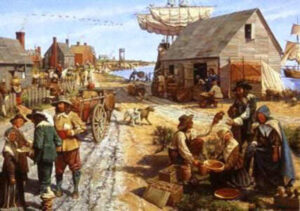

If you travel to a different area of the country or even other English-speaking areas of the world, you will find that there are different accents and even that words are used differently. Still, just because you are visiting or moving to those places doesn’t mean that you will immediately take on those accents, or their use of words. Nevertheless, when you move to a different region, your use of the language does immediately begin to evolve, whether you realize it or not, and whether it is intentional or not. The first Englishmen to set foot on American soil with the intent to colonize the land were no exception. The language began to evolve almost immediately…and it remains a fluid, almost living process to this day. “Americanisms” have been created or changed from other English terms to produce a language that very much differs from that of our forefathers, signifying our uniqueness and independence.

Of course, the people didn’t notice the changes right away, but by 1720, the English colonists began to notice that their language was quite different from that spoken in their Motherland. I’m sure they wondered just how that came to be. Basically, when people hear new “slang” words and no longer hear the old accents spoken, the whole dynamic of the language changes. Also, very formal words like “thee, thou, and such” might become too cumbersome, and so they are discarded. By 1790, everyone in the new nation knew that English would be our native language, because when the United States took its first census that year, there were four million Americans, 90% of whom were descendants of English colonists. So, it made perfect sense.

Nevertheless, it would not be the same as that spoken in Great Britain. The reasons are varied, but the most obvious reason was the sheer distance from England. The main way the language evolved was that over the years, many words were borrowed from the Native Americans, as well as from immigrants from France, Germany, Spain, and other countries. In addition, words that became obsolete “across the pond” continued to be utilized in the colonies. In other cases, words simply had to be created in order to explain the unfamiliar landscape, weather, animals, plants, and living conditions that these early pioneers encountered. By 1790 it was obvious that American English would be a very different language than British English.

The first “official” reference to the “American dialect” was made in 1756 by Samuel Johnson, a year after he published his Dictionary of the English Language. Johnson’s use of the term “American dialect” was not meant to simply explain the differences but rather was intended as an insult. This “new” language was called “barbarous,” and our “Americanisms” were referred to as barbarisms. Because of the dissension between England and the Colonies, the British sneering at our language continued for more than a century after the Revolutionary War. They laughed at hundreds of American terms and phrases and condemned them as unnecessary, but our newly independent Americans were proud of their “new” American language and considered it to be another badge of independence. In 1789, Noah Webster wrote in his Dissertations on the English Language, “The reasons for American English being different than English English are simple…As an independent nation, our honor requires us to have a system of our own, in language as well as government.” In the eyes of the Colonists, that settled the matter, and when the United States was formed, the new nation was proud to be separated from the “Motherland” and would have it no other way.

Our leaders, including Thomas Jefferson and Benjamin Rush, agreed; it was not only good politics, but it was also sensible. To the British, the most atrocious change was the heavy use of contractions such as ain’t, can’t, don’t, and couldn’t. The feelings of the “rest of the world” didn’t matter to Americans, and the language changed even more during the western movement as numerous Native American and Spanish words became an everyday part of our language. The evolution of the American language continued into the 20th century and really continues even to this day. After World War I, when Americans were in a patriotic and anti-foreign mood, the state of Illinois went so far as to pass an act making the official language of the state the “American language.” In 1923, the State of Illinois General Assembly passed the act, stating in part, “The official language of the State of Illinois shall be known hereafter as the ‘American’ language and not as the ‘English’ language.” A similar bill was also introduced in the US House of Representatives the same year but died in committee. Ironically, after centuries of forming our “own” language, the English and American versions are once again beginning to blend as movies, songs, electronics, and global traveling bring the two “languages” closer together.

As its name indicates, “pole sitting is the practice of sitting on top of a pole (such as a flagpole) for extended lengths of time, generally used as a test of endurance.” When you first hear the words, it might generate a picture in your mind of someone trying to sit on the little ball that is on top of most flag poles, but actually, a small platform was typically placed at the top of the pole for the sitter…thankfully. The fad originated with stunt actor and former sailor Alvin “Shipwreck” Kelly. It took off in the mid-to-late 1920s, but mostly died out after the start of the Great Depression. I suppose that during the Great Depression, there just didn’t seem to be an interest in silliness like that.

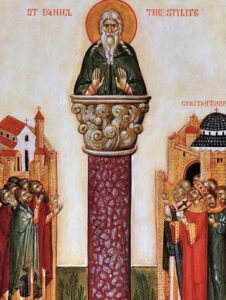

Pole sitting is actually a much older practice than you might think. It was predated by the ancient ascetic discipline of stylitism, which is basically column-sitting. Saint Simeon Stylites the Elder of Antioch (now Turkey), who lived about 388–459, was a column-sitter who sat on a small platform on a column for 36 years. Why??? I don’t understand the purpose of something like that…at least not in that era. These days it is most likely a way of setting some outlandish record. It seems that getting into the Guinness Book of World Records is a great way of obtaining some recognition.

It seems that something like that was the idea when 14-year-old William Ruppert broke the pole sitting record of 23 days in 1929. “Shipwreck” Kelly’s initial 1924 sit lasted 13 hours and 13 minutes. It soon became a big fad, with other contestants setting records of 12, 17, and 21 days. In 1929, “Shipwreck” Kelly decided to reclaim the title. He sat on a flagpole for 49 days in Atlantic City, New Jersey, setting a new record. As with all records, the race to top it was on, and the following year, 1930, his record was broken by Bill Penfield in Strawberry Point, Iowa, who sat on a flagpole for 51 days and 20 hours, until a thunderstorm forced him down. Let’s face it, while sitting on a pole to break a record was a “fun” idea, it was not something they were willing to lose their lives over. For the most part, pole sitting was confined to the 1920s, ending with the start of the Depression.

Then, in 1946, a 37-year-old Ohio resident named Marshall Jacobs, who was trying to revive the fad, married his fiancée Yolanda Cosmar atop a flagpole with a roost. A wedding photograph of them kissing gained wide attention. In 1949, Cleveland resident Charley Lupica sat atop a flagpole platform for 117 days to support the Cleveland Indians in their pennant race against the New York Yankees. A serious Indians fan, Lupica began sitting on a flagpole above his grocery store on May 31, after an argument with Yankees fans. He claimed he would stay on the pole until the Indians either claimed first place in the standings or were eliminated from contention. Unfortunately for him, the Indians never passed the Yankees, and Lupica came down during the Indians’ final home game on September 25. Indians owner Bill Veeck had moved Lupica and the flagpole to Cleveland Municipal Stadium the night before as a promotional stunt. Richard “Dixie” Blandy claimed various records as champion, at 77, 78, and 125 days, between 1933 and 1963. He died on May 6, 1974, in Harvey, Illinois, when the 50-foot pole on which he was sitting collapsed. Peggy (Townsend) Clark set a record of 217 days in 1964 in Gadsden, Alabama.

Pole sitting was also used for other things. From November 1982 to January 21, 1984, a total of 439 days, 11 hours, and 6 minutes, H. David Werder sat on a pole to protest against the price of gasoline. The game show “What’s My Line,” hosted by John Charles Daly, got in on the action when a flagpole sitter was the first guest on the July 3, 1955, episode. It even made its way into the television show M*A*S*H, when everyone got in on the fun, encouraging Klinger to stick it out. When Colonel Potter learned that the Army record for pole sitting was 96 hours, he turned the tables on Klinger by convincing him to stay up there to break the record. Eventually the fad died out again, at least until someone decides to pick it up once more.

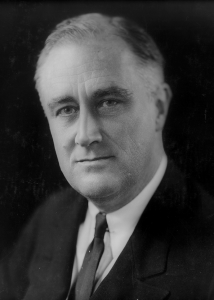

The Yalta Conference was held February 4 – 11, 1945. It was a meeting of the heads of government of the United States, the United Kingdom, and the Soviet Union during World War II to discuss the postwar reorganization of Germany and Europe, because it was becoming more and more evident that the war was winding down and that the Allies would be the victors. Representing the United States was President Franklin D. Roosevelt. Prime Minister Winston Churchill represented the United Kingdom, and the Soviet Union was represented by General Secretary Joseph Stalin. The conference was held near Yalta, in Crimea, Soviet Union, within the Livadia, Yusupov, and Vorontsov palaces.

As a war comes to a close, there must be some kind of postwar strategy for shaping how the defeated nation will move into peacetime. It cannot be allowed to continue in the same direction that led to war in the first place, and the nations that won the victory will be key players in the newly formed alliance. The Allies needed to shape a postwar peace that represented not only a collective security order, but also a plan to give self-determination to the liberated peoples of Europe, following years of oppression. The conference was intended mainly to discuss the re-establishment of the nations of war-torn Europe. Unfortunately, within a few years, and with the Cold War dividing the continent, the conference became a subject of intense controversy. Yalta was the second of three major wartime conferences among the three leaders, who were known as the Big Three. The first was the Tehran Conference in November 1943, and the third was the Potsdam Conference in July 1945, the same year as Yalta. Yalta was also preceded by a conference in Moscow in October 1944, not attended by Roosevelt, in which Churchill and Stalin had spoken about Western and Soviet spheres of influence in Europe.

Finally, on February 11, 1945, after a week of intensive bargaining by the three leaders of the Allied powers, the conference in Yalta ended. By the time the Yalta Conference was in session, the Western Allies had liberated all of France and Belgium and were fighting on the western border of Germany. In the east, Soviet forces were 40 miles from Berlin, having already pushed the Germans back from Poland, Romania, and Bulgaria. These operations proved without a doubt that a German defeat was imminent. So, the focus moved toward shaping postwar Europe. With victory over Germany three months away, Churchill and Stalin were more intent on dividing Europe into zones of political influence than on addressing military considerations. Germany was to be divided into four occupation zones, administered by the three major powers and France. It was also to be thoroughly demilitarized, and its war criminals brought to trial. The Soviets were given the duty to administer those European countries they liberated but promised to hold free elections. The British and Americans would oversee the transition to democracy in countries such as Italy, Austria, and Greece. Final plans were made for the establishment of the United Nations. A charter conference was scheduled for April in San Francisco.

President Roosevelt, just two months from his death, concentrated his efforts on gaining Soviet support for the United States war effort against Japan. The secret United States atomic bomb project had not yet tested a weapon, and it was estimated that an amphibious attack against Japan could cost hundreds of thousands of American lives. After being assured of an occupation zone in Korea, and possession of Sakhalin Island and other territories historically disputed between Russia and Japan, Stalin agreed to enter the Pacific War within two to three months of Germany’s surrender. Most of the Yalta accords remained secret until after World War II, and the items that were revealed, such as “Allied plans for Germany and the United Nations, were generally applauded. Roosevelt returned to the United States exhausted, and when he went to address the U.S. Congress on Yalta, he was no longer strong enough to stand with the support of braces. In that speech, he called the conference ‘a turning point, I hope, in our history, and therefore in the history of the world.’” Unfortunately, he was by then too sick to recover. Roosevelt did not live long enough to see the Iron Curtain drop along the lines of division laid out at Yalta. In April, he traveled to his cottage in Warm Springs, Georgia, to rest, and on April 12 he died of a cerebral hemorrhage.

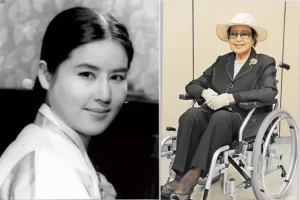

It is no secret that dictators do as they please, and they don’t care what anyone around them thinks of what they do. That is why so many people try to escape countries run by a dictator. The things dictators do are often violent and horrific, but sometimes they can be totally bizarre too. One of the strangest acts was performed not by Kim Il-sung, who was dictator in 1978, but rather by his son, Kim Jong-il, who assumed, and rightly so, that he could also do what he wanted to. Kim Jong-il was a film buff, as well as the future North Korean dictator, and he decided that he wanted to make a really good movie. As any director knows, in order to make a really good movie, one must have a really good actor. Kim Jong-il knew exactly who he wanted, but rather than be bothered by the process of hiring the best actor, he simply kidnapped the one he wanted…Choi Eun-hee, as well as her ex-husband, director Shin Sang-ok. Kim Jong-il kept the pair in North Korea for several years, forcing them to make movies, including Pulgasari, his very own version of Godzilla. They had no choice but to comply with his wishes, or they would die.

Choi was born in Gwangju, Gyeonggi Province, in 1926. Her first acting role was in the 1947 film “A New Oath.” She rose to fame the following year after starring in the 1948 film “The Sun of Night” and soon became known as one of the “troika” of Korean film, alongside actresses Kim Ji-mee and Um Aing-ran. She married the director Shin Sang-ok in 1954, and the two founded Shin Film. Her career flourished, and she went on to act in over 130 films. She was considered one of the biggest stars of South Korean film in the 1960s and 1970s. Due to her fame, she starred in many of Shin’s iconic films, including 1958’s “A Flower in Hell” and 1961’s “The Houseguest and My Mother.” The one sadness in their lives was that she could not have children, so the couple adopted two children together, Jeong-kyun and Myung-kim. Choi divorced Shin after hearing that he had fathered two children with a young actress.

Choi’s career began to suffer after her divorce, and she traveled to Hong Kong in 1978 to meet with a person posing as a businessman who offered to set up a new film company with her. While she was in Hong Kong, Choi was abducted and taken to North Korea by order of Kim Jong-il. Shin began a frantic search for Choi, but while searching for her, he was also abducted and taken to North Korea. So began years in captivity, from which they finally escaped in 1986, during a press conference in Vienna, where they were attending a film festival. They fled to the US embassy and requested political asylum. Following their escape, they lived in Reston, Virginia, then Beverly Hills, California, before finally returning to South Korea in 1999. Choi died of kidney disease on April 16, 2018. She was 91.

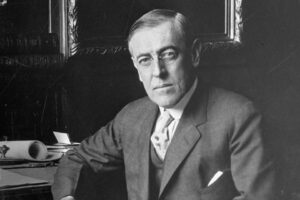

The SS California, owned by the Anchor Line Steamship Company (a Scottish merchant shipping company that was founded in 1855 and dissolved in 1980), departed New York on January 29, 1917, bound for Glasgow, Scotland, with 205 passengers and crewmembers on board. While the trip should have been a pleasant journey, world events would soon change everything in an instant. On February 3, 1917, United States President Woodrow Wilson gave a speech in which he “broke diplomatic relations with Germany and warned that war would follow if American interests at sea were again assaulted.” Of course, all ships sailing the seas, especially those departing from or arriving in the United States, or any that carried US passengers, were warned about the possibility of a German attack.

February 7, 1917, found the SS California some 38 miles off the coast of Fastnet, Ireland, when the ship’s captain, John Henderson, spotted a submarine off his ship’s port side a little after 9am. I can only imagine the sinking feeling the captain must have had at that moment. The Germans were not known for any kind of compassion, and they didn’t particularly care if this was a passenger ship. They figured that the ship might be carrying weapons, and it actually might have been. Captain Henderson ordered the gunner at the stern of the ship to fire in defense, if necessary. Unfortunately, there would not be time to do so, because moments later and without warning, the submarine fired two torpedoes at the ship. The first torpedo missed, but the second exploded into the port side of the steamer, killing five people instantly. The explosion was so violent and devastating that it caused the 470-foot, 9,000-ton steamer to sink just nine minutes later. The crew quickly sent desperate S.O.S. calls, but the best they could hope for was a hasty arrival of rescue ships. Time was simply not on their side. Another 38 people drowned after the initial explosion, and with the 5 who died when the torpedo hit the ship, a total of 43 died. It was an act of war by the Germans.

The Germans were known for this type of blatant attack, in complete defiance of Wilson’s warnings. It’s almost as if they were simply crazed with hatred. Wilson had warned about the consequences of unrestricted submarine warfare. Then came the discovery and release of the Zimmermann telegram, in which the German foreign minister reached out to the Mexican government about a possible Mexican-German alliance in the event of a war between Germany and the United States. That caused Wilson and the United States to take the final steps toward war. On April 2, 1917, Wilson delivered his war message before Congress. It was this action that brought about the United States’ entrance into the First World War, which came about just four days later.
